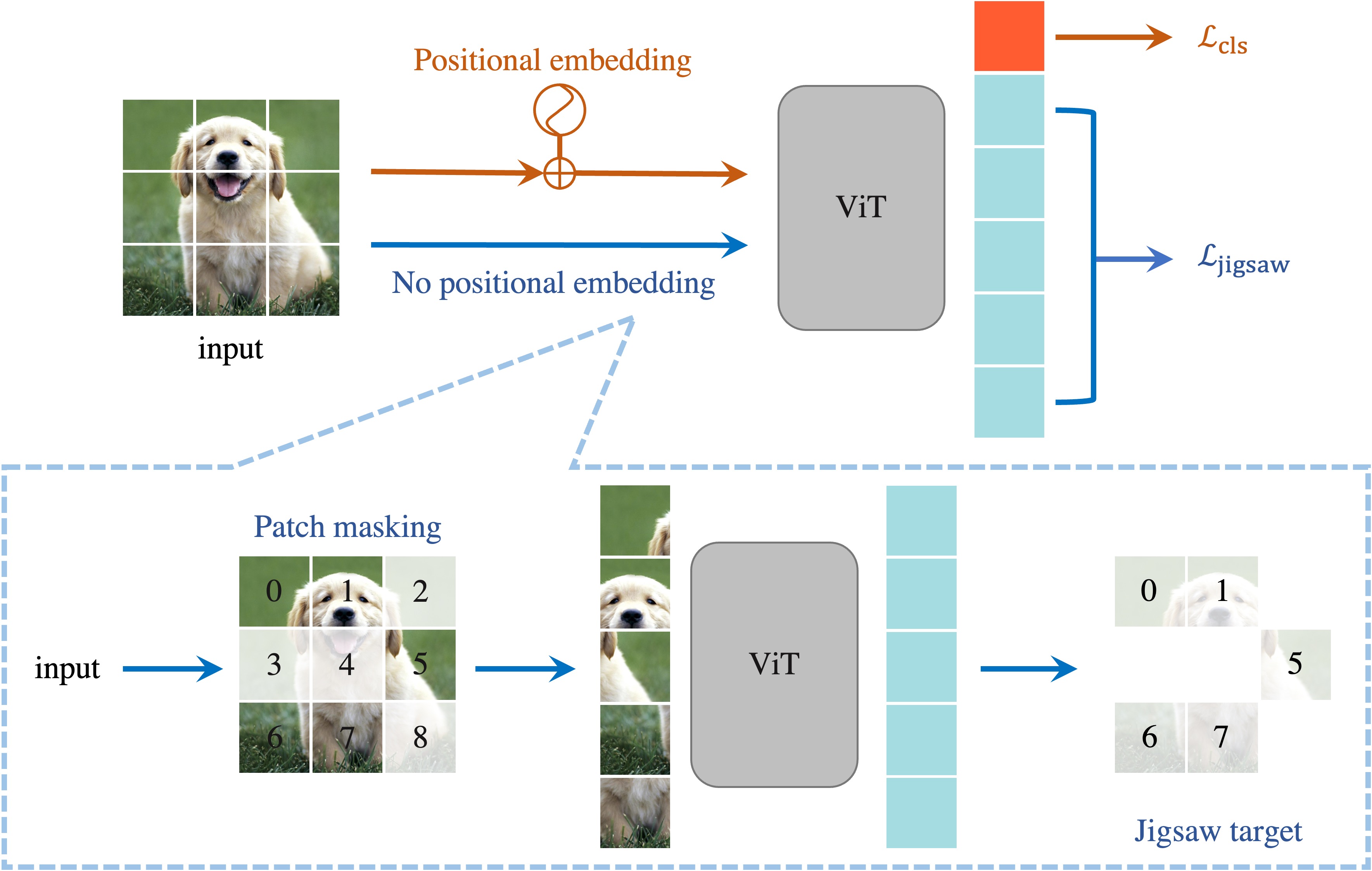

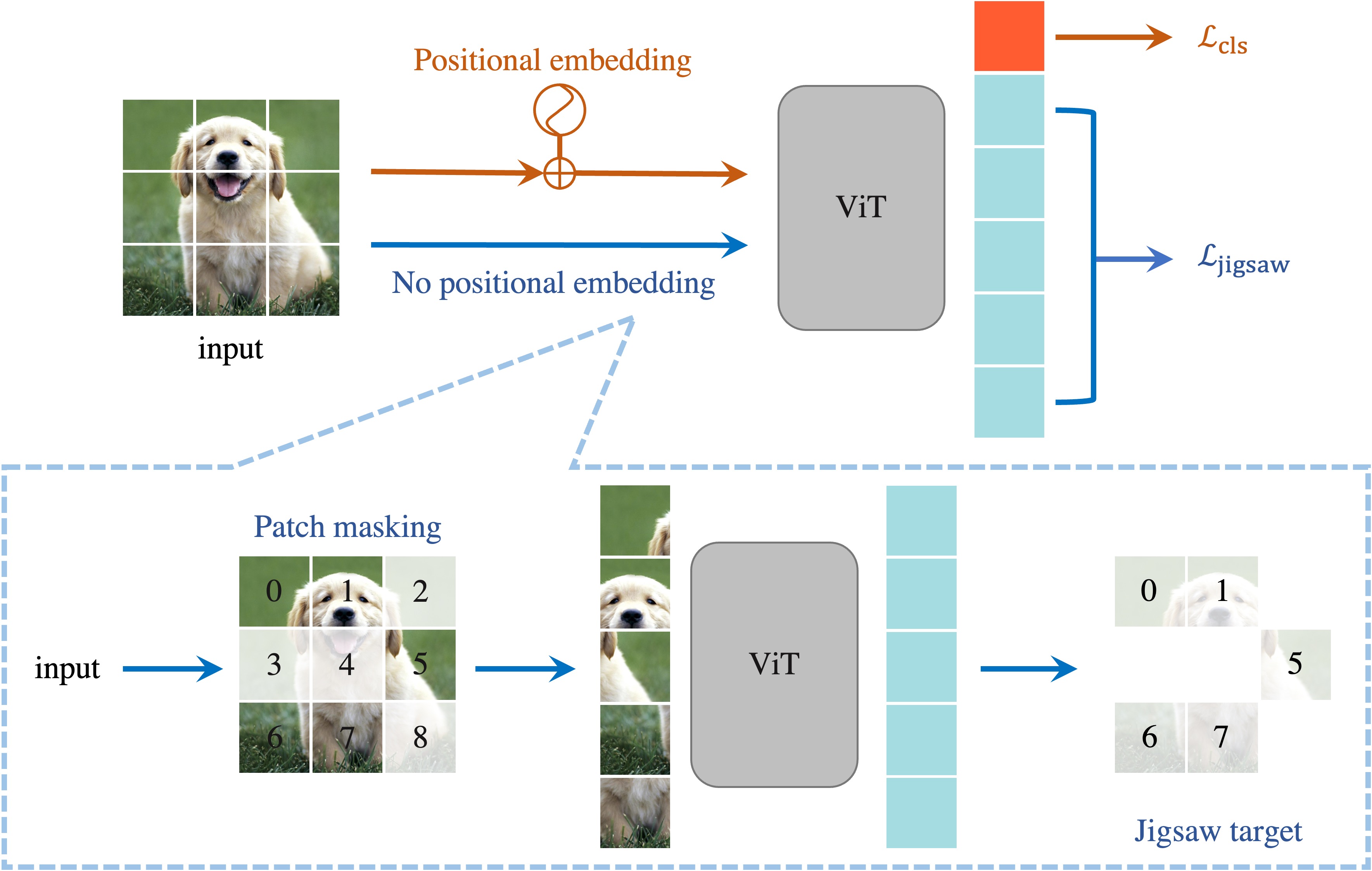

Overview framework of our Jigsaw-ViT.

(Top) We incorporate jigsaw puzzle solving (blue flow) into the standard ViT for image classification (red flow). During training, the two tasks are learned jointly.

(Bottom) The details of our jigsaw puzzle flow. We drop several patches (patch masking) and remove positional embeddings before feeding the remaining patches to the ViT. For each unmasked patch, the model predicts the class corresponding to that patch's position.

Abstract

The success of Vision Transformer (ViT) in various computer vision tasks has promoted the ever-increasing prevalence of this convolution-free network.

The fact that ViT works on image patches makes it potentially relevant to the problem of jigsaw puzzle solving, a classical self-supervised task that aims to reorder shuffled image patches back to their natural form. Despite its simplicity, solving jigsaw puzzles has been shown to help diverse tasks using Convolutional Neural Networks (CNNs), such as self-supervised feature representation learning, domain generalization, and fine-grained classification.

In this paper, we explore solving jigsaw puzzles as a self-supervised auxiliary loss in ViT for image classification, named Jigsaw-ViT. We show two modifications that make Jigsaw-ViT superior to the standard ViT: discarding positional embeddings and masking patches randomly. Although simple, Jigsaw-ViT improves both generalization and robustness over the standard ViT, two properties that usually trade off against each other. Experimentally, we show that adding the jigsaw puzzle branch provides better generalization than ViT on large-scale image classification on ImageNet. Moreover, the auxiliary task also improves robustness to noisy labels on Animal-10N, Food-101N, and Clothing1M, as well as to adversarial examples.

Method

An overview of our approach is shown in the teaser image.

Specifically, we focus on the image classification problem using ViT.

Our goal is to train a model that solves the standard classification and jigsaw puzzles simultaneously.

The total loss $\mathcal{L}_{\text{total}}$ is a simple weighted combination of two cross-entropy (CE) losses: the class prediction loss on the class token, $\mathcal{L}_{\text{cls}}$, and the position prediction loss on the patch tokens, $\mathcal{L}_{\text{jigsaw}}$:

$$\mathcal{L}_{\text{total}} = \underbrace{CE(\mathbf{y}_{\text{pred}}, y)}_{\mathcal{L}_{\text{cls}}} + \eta \underbrace{CE(\tilde{\mathbf{y}}_{\text{pred}}, \tilde{y})}_{\mathcal{L}_{\text{jigsaw}}}$$

where $\tilde{\mathbf{y}}_{\text{pred}}$ and $\tilde{y}$ denote the position prediction and the corresponding real position, respectively, and $\eta$ is a hyper-parameter to balance the two losses.
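The weighted combination of the two cross-entropy losses described above can be sketched in plain Python. This is only an illustrative sketch, not the authors' implementation: the function names, the averaging of the position loss over the kept patches, and the example weight are assumptions made here for clarity.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one sample: -log softmax(logits)[target]."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def total_loss(cls_logits, label, patch_logits, positions, eta):
    """Return (total, cls_loss, jigsaw_loss) for a single image.

    cls_logits:   class scores predicted from the class token
    label:        ground-truth class index
    patch_logits: one list of position scores per kept patch token
    positions:    true position index of each kept patch
    eta:          weight balancing the two losses (hypothetical value below)
    """
    loss_cls = cross_entropy(cls_logits, label)
    # Assumption: the position loss is averaged over the kept patches.
    per_patch = [cross_entropy(z, p) for z, p in zip(patch_logits, positions)]
    loss_jigsaw = sum(per_patch) / len(per_patch)
    return loss_cls + eta * loss_jigsaw, loss_cls, loss_jigsaw


if __name__ == "__main__":
    # Toy example: 2 classes, 2 kept patches, 4 candidate positions.
    total, l_cls, l_jig = total_loss(
        [1.5, -0.5], 0, [[0.2, 0.1, 2.0, 0.0], [1.0, 0.0, 0.0, 0.3]], [2, 0], eta=0.1
    )
    print(f"cls={l_cls:.4f} jigsaw={l_jig:.4f} total={total:.4f}")
```

Setting `eta` to zero recovers plain classification training, which makes the auxiliary term easy to ablate.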

Our jigsaw puzzle flow is detailed in the bottom part of the teaser image.

Specifically, we make two changes compared to a naive jigsaw puzzle implementation:

(i) we remove positional embeddings from the jigsaw puzzle flow, since they would otherwise provide explicit clues for position prediction;

(ii) we randomly drop a fraction of the patches in the input, where the fraction dropped (the mask ratio) is a hyper-parameter.

Both strategies clearly make the jigsaw puzzle harder to solve, which is shown to benefit the main classification task (Section 4 in the paper).
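The input preparation for the jigsaw flow (no positional embeddings, random patch dropping, position indices as targets) can be sketched as follows. This is a minimal illustration under stated assumptions rather than the released code: the helper name `make_jigsaw_input`, the explicit shuffling step, and the rounding of the number of dropped patches are all hypothetical choices.

```python
import random


def make_jigsaw_input(patches, mask_ratio, rng):
    """Build the jigsaw-flow input from patch embeddings in raster order.

    patches:    list of patch embeddings WITHOUT positional embeddings added
    mask_ratio: fraction of patches to drop (assumed here to lie in [0, 1))
    rng:        a random.Random instance, passed in for reproducibility

    Returns (kept_patches, position_targets), where position_targets[j] is
    the original raster index of kept_patches[j].
    """
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    n = len(patches)
    n_keep = n - int(n * mask_ratio)  # assumption: round the drop count down
    order = list(range(n))
    rng.shuffle(order)                # random permutation of patch positions
    kept = order[:n_keep]             # patches after the cut are dropped
    kept_patches = [patches[i] for i in kept]
    return kept_patches, kept


if __name__ == "__main__":
    # 14 x 14 = 196 patches stands in for a 224 x 224 image with 16 x 16 patches.
    dummy_patches = [f"patch_{i}" for i in range(196)]
    tokens, targets = make_jigsaw_input(dummy_patches, 0.5, random.Random(0))
    print(len(tokens), "tokens kept; first targets:", targets[:5])
```

Because the kept tokens carry no positional embedding, the model has to infer each target index from patch content and context alone, which is what makes the auxiliary task non-trivial.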

Since the proposed jigsaw puzzle solving is a self-supervised task, it can be easily plugged into an existing ViT without modifying the original architecture.

Results

Please refer to our paper for more experiments.

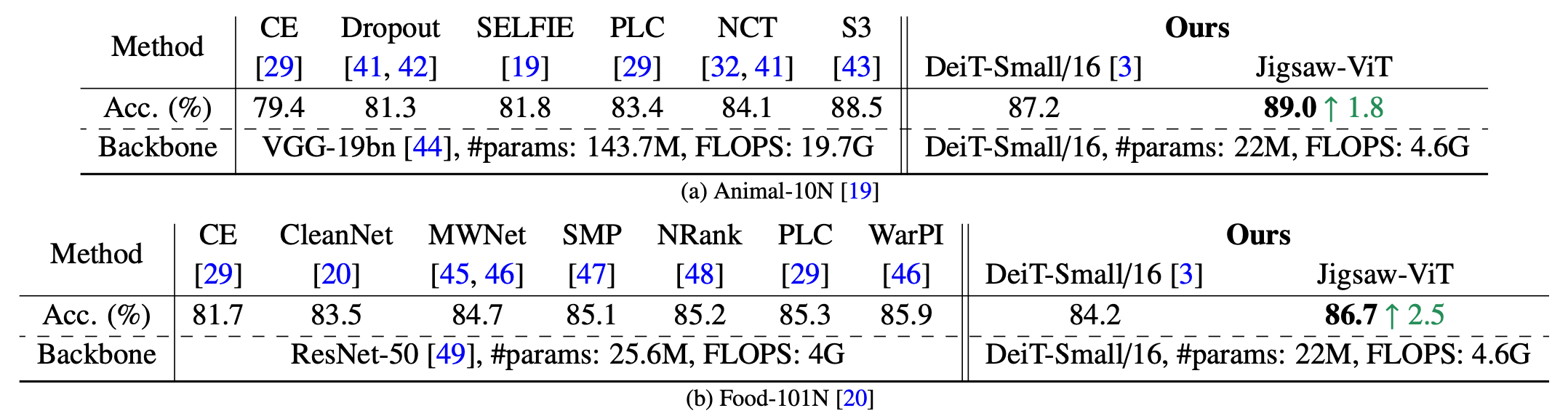

Robustness to label noise

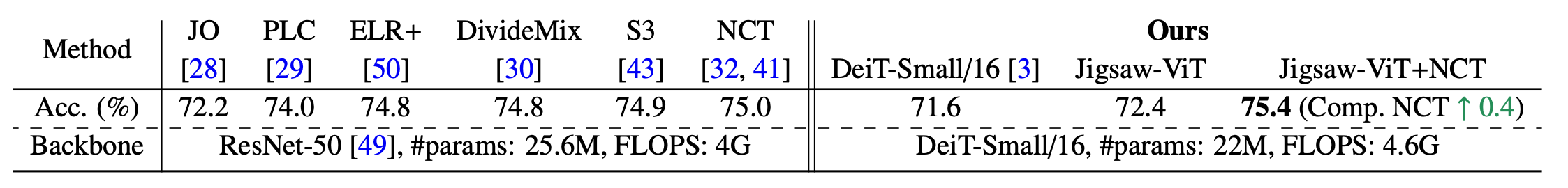

Image classification on datasets with low noise rate.

We compare to state-of-the-art approaches and report test top-1 accuracy (%) on Animal-10N (noise ratio ~8%, Table (a)) and Food-101N (noise ratio ~20%, Table (b)).

Gains of Jigsaw-ViT over DeiT-Small/16 are marked with ↑.

Image classification on datasets with high noise rate.

We compare to state-of-the-art approaches and report test top-1 accuracy (%) on Clothing1M (noise ratio ~38%).

Gains of Jigsaw-ViT+NCT over NCT are marked with ↑.

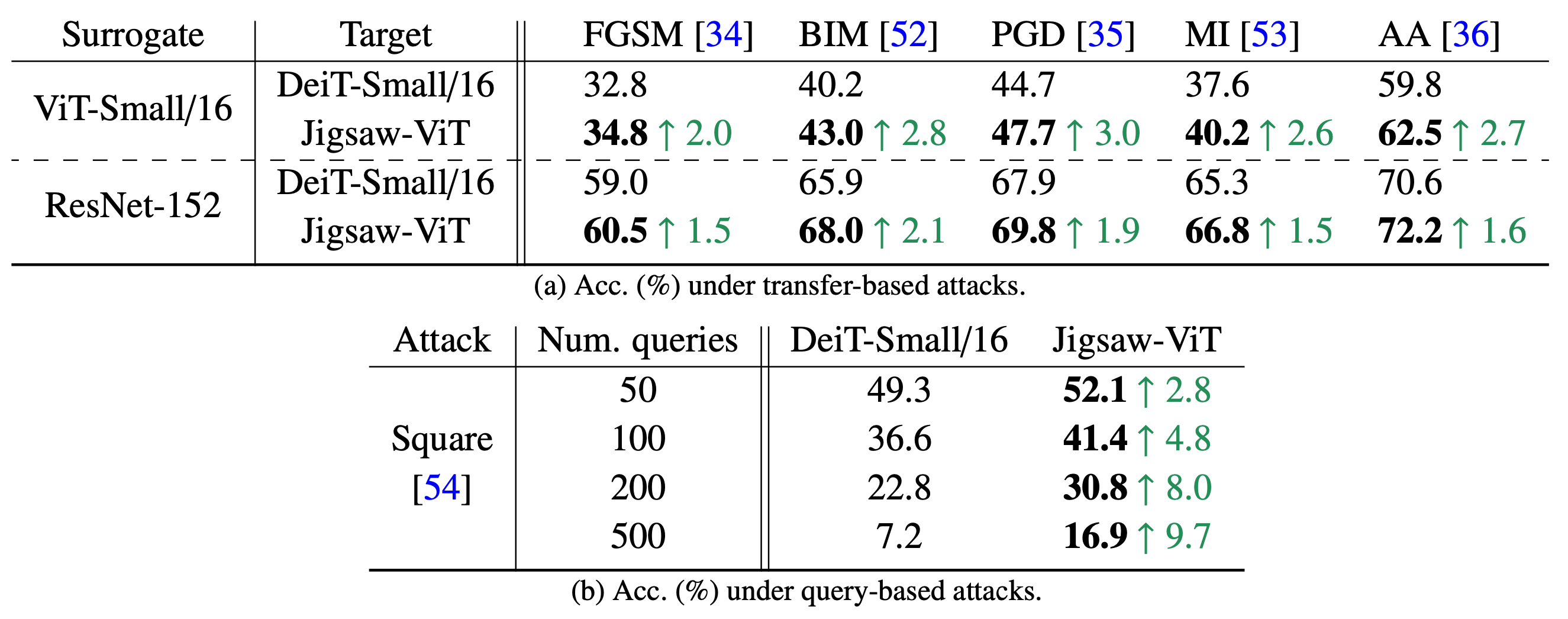

Robustness to adversarial examples

Robustness to adversarial examples in black-box settings.

We report top-1 accuracy (%) after attacks on the ImageNet-1K validation set (higher numbers indicate better model robustness).

(a) Transfer-based attacks, where adversarial examples are generated by attacking a surrogate model.

(b) Query-based attacks, where adversarial examples are generated by querying the target classifier multiple times.

Gains of Jigsaw-ViT over DeiT-Small/16 are marked with ↑.

Robustness to adversarial examples in white-box settings.

We report top-1 accuracy (%) after attacks on the ImageNet-1K validation set (higher numbers indicate better model robustness).

(a) White-box attacks with  -norm perturbation.

(b) White-box attacks with  -norm perturbation.

Gains of Jigsaw-ViT over DeiT-Small/16 are marked with ↑.

Resources

BibTeX

If you find this work useful for your research, please cite:

@article{chen2022jigsaw,

author={Chen, Yingyi and Shen, Xi and Liu, Yahui and Tao, Qinghua and Suykens, Johan A. K.},

title={Jigsaw-ViT: Learning Jigsaw Puzzles in Vision Transformer},

journal={Pattern Recognition Letters},

volume = {166},

pages = {53-60},

year={2023},

publisher={Elsevier}

}

Acknowledgements

This work is jointly supported by EU: The research leading to these results has received funding from the European Research Council under the European Union's Horizon 2020 research and innovation program / ERC Advanced Grant E-DUALITY (787960). This paper reflects only the authors' views and the Union is not liable for any use that may be made of the contained information.

Research Council KU Leuven:

Optimization frameworks for deep kernel machines C14/18/068

Flemish Government:

FWO: projects: GOA4917N (Deep Restricted Kernel Machines: Methods and Foundations), PhD/Postdoc grant

This research received funding from the Flemish Government (AI Research Program).

EU H2020 ICT-48 Network TAILOR (Foundations of Trustworthy AI - Integrating Reasoning, Learning and Optimization)

Leuven.AI Institute